References

Deng, Jia, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. “ImageNet: A Large-Scale Hierarchical Image Database.” In 2009 IEEE Conference on Computer Vision and Pattern Recognition, 248–55. IEEE.

Devlin, Jacob, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. “BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding.” In Proceedings of the North American Chapter of the Association for Computational Linguistics (NAACL).

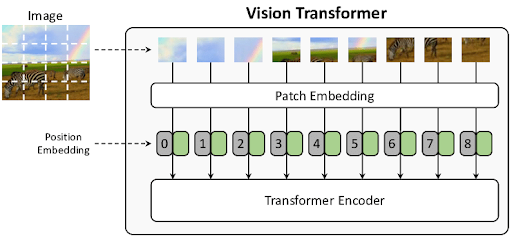

Dosovitskiy, Alexey, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, et al. 2020. “An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale.” arXiv Preprint arXiv:2010.11929.

Kolesnikov, Alexander, Lucas Beyer, Xiaohua Zhai, Joan Puigcerver, Jessica Yung, Sylvain Gelly, and Neil Houlsby. 2020. “Big Transfer (BiT): General Visual Representation Learning.” In European Conference on Computer Vision (ECCV).

Krizhevsky, Alex, Geoffrey Hinton, et al. 2009. “Learning Multiple Layers of Features from Tiny Images.”

Roy, Prasun, Subhankar Ghosh, Saumik Bhattacharya, and Umapada Pal. 2018. “Effects of Degradations on Deep Neural Network Architectures.” arXiv Preprint arXiv:1807.10108.

Sabour, Sara, Nicholas Frosst, and Geoffrey E Hinton. 2017. “Dynamic Routing Between Capsules.” Advances in Neural Information Processing Systems 30.

Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. “Attention Is All You Need.” In Advances in Neural Information Processing Systems (NIPS).